Beyond

UC

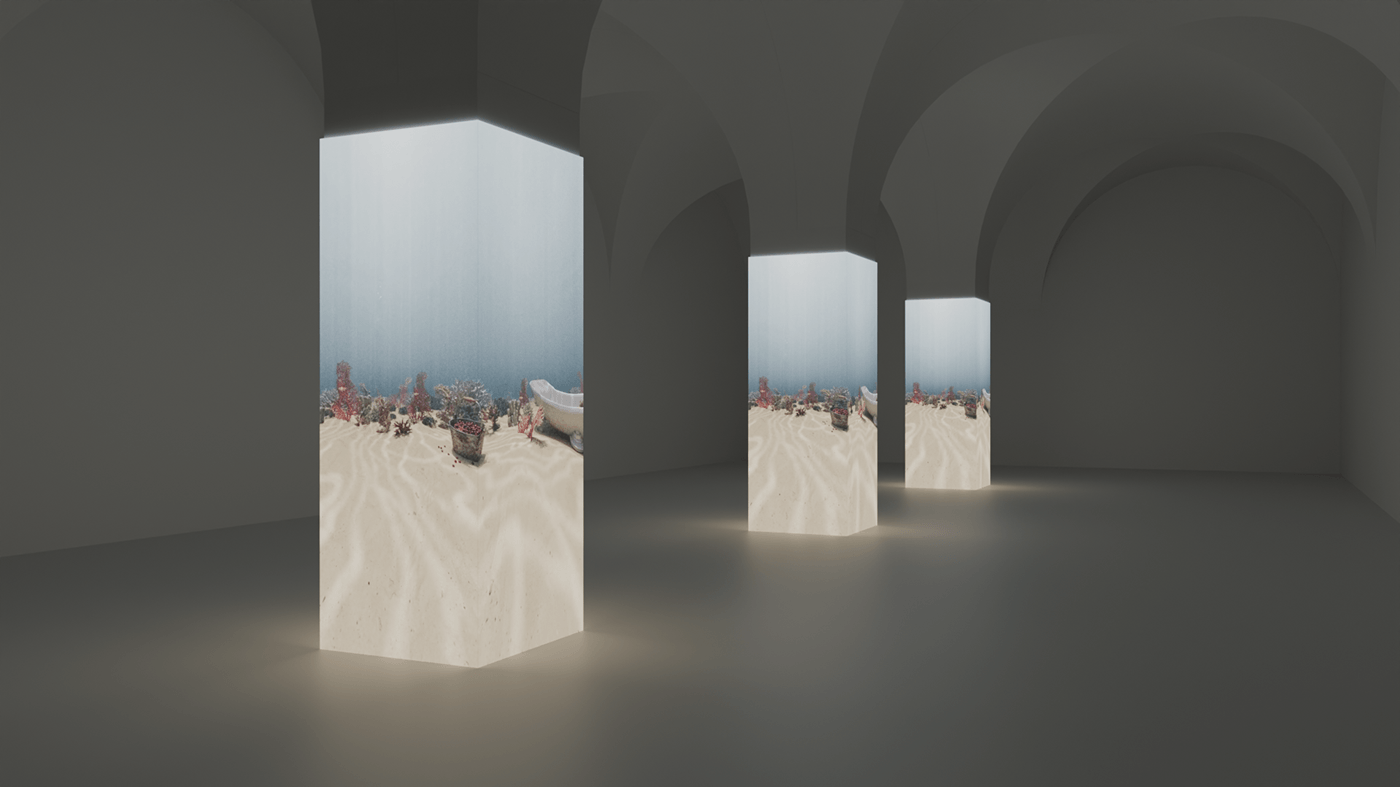

“Beyond” is an interactive installation that connects the real and virtual worlds through artificial intelligence and immersive technologies. The experience is built around a conversation between the user and a virtual entity that speaks in a voice cloned from an initial recording of the user reading a text. In parallel, the virtual room is transformed in real time by the dynamic insertion of 3D models triggered by specific moments of the conversation, and its rendering adapts to the user's eye position in the physical space.

The work began with an analysis of several notable projects from the exhibition “Cybernetic Serendipity,” held at the Institute of Contemporary Arts in London in 1968 and curated by Jasia Reichardt. This exhibition, a pioneer in bringing art and technology together, served as a historical reference for understanding how artists began to explore computers, feedback systems, and human-machine interaction.

Based on some selected projects, various ideas were explored for the development of the work, seeking to update those principles for the contemporary context. This research process helped define which technological and conceptual approaches would make the most sense for the final project.

This led to the idea for “Beyond”: an interactive installation that creates a virtual room responsive to the user's voice and presence. After reading a text for voice cloning, the user converses with a bot whose replies are generated with the OpenAI API and spoken in that same cloned voice. In parallel, keywords extracted from the conversation bring in 3D objects obtained from Sketchfab, while a Kinect v2 updates the perspective in real time, bringing the experience closer to a CAVE-type system.

During prototyping, we first implemented the conversation with the artificial intelligence, using the OpenAI API to generate responses in real time; any user question is answered in just a few seconds.
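As an illustration of how such a dialogue can stay responsive, the sketch below keeps a short rolling history in the role/content message format used by chat-style APIs. The `Conversation` class, the `max_turns` limit, and the injected `complete` callable are hypothetical names chosen for this example, not the installation's actual code.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps a rolling chat history in the role/content message format."""

    def __init__(self, system_prompt: str, max_turns: int = 6) -> None:
        self.system: Message = {"role": "system", "content": system_prompt}
        self.turns: List[Message] = []
        self.max_turns = max_turns  # user/assistant pairs to keep (assumed limit)

    def messages(self) -> List[Message]:
        # The system prompt always comes first, followed by the recent turns.
        return [self.system] + self.turns

    def ask(self, user_text: str, complete: Callable[[List[Message]], str]) -> str:
        # `complete` is any function that maps a message list to a reply string.
        self.turns.append({"role": "user", "content": user_text})
        answer = complete(self.messages())
        self.turns.append({"role": "assistant", "content": answer})
        # Drop the oldest pairs so each request stays small and fast.
        self.turns = self.turns[-2 * self.max_turns:]
        return answer


if __name__ == "__main__":
    conv = Conversation("You answer as the visitor's virtual double.")
    # Stand-in for a real model call, so the sketch runs offline.
    print(conv.ask("Where am I?", lambda msgs: "In a room made of your own words."))
```

In a real setup, `complete` would wrap a call to the chat endpoint of the OpenAI API and return the text of the first choice. Passing it in as a parameter keeps the history logic testable without network access.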

Once the dialogue was stable, we moved on to voice cloning and adopted ElevenLabs, a company specializing in speech synthesis. After the user reads an initial text, the audio is sent to the ElevenLabs API, which clones the voice in a few seconds for later use in the artificial intelligence's responses.
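The second half of this step, speaking a reply in the cloned voice, can be sketched as a single HTTP request. The example only builds the request and does not send it. The base URL, the `text-to-speech/{voice_id}` path, and the `xi-api-key` header reflect our understanding of the ElevenLabs REST API and should be checked against its current documentation; `build_tts_request` is a hypothetical helper name, and the voice ID is assumed to come from the earlier cloning call.

```python
import json
import urllib.request

# Assumed base URL of the ElevenLabs REST API; verify against the current docs.
API_BASE = "https://api.elevenlabs.io/v1"


def build_tts_request(voice_id: str, text: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a text-to-speech request for a cloned voice."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/text-to-speech/{voice_id}",
        data=body,
        method="POST",
        headers={
            "xi-api-key": api_key,          # assumed authentication header
            "Content-Type": "application/json",
            "Accept": "audio/mpeg",         # ask for MP3 audio in the response
        },
    )


if __name__ == "__main__":
    req = build_tts_request("VOICE_ID", "Hello, this is your own voice.", "API_KEY")
    print(req.get_method(), req.full_url)
```

Sending the request with `urllib.request.urlopen(req)` would return the audio bytes, which can then be played through the headphones.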

To enrich the visual experience, we tried different approaches to the virtual room. Initially, the background changed dynamically with images generated by DALL-E 2 from the conversation, but this 2D solution proved repetitive, which led us to adopt 3D objects.

Using keywords extracted from the dialogue via the OpenAI API, we integrated 3D models from Sketchfab, which are positioned randomly in the room. Additionally, a Kinect v2 drives a CAVE-type system, adjusting the perspective in real time to the user's movements and tying together cloned voice, conversation, and responsive environment.
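The keyword-to-object step can be sketched as follows: each keyword becomes a model search query and a random position inside the virtual room. The room dimensions, the `Placement` type, and the function names are assumptions made for this example, and the Sketchfab search URL and its parameters should be verified against the Sketchfab API documentation.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple
from urllib.parse import urlencode

# Assumed size of the virtual room: width, height, depth in metres.
ROOM_SIZE = (2.0, 2.0, 2.0)


@dataclass
class Placement:
    keyword: str
    search_url: str
    position: Tuple[float, float, float]


def search_url(keyword: str) -> str:
    # Assumed Sketchfab search endpoint and parameters; verify against the docs.
    query = urlencode({"type": "models", "q": keyword, "downloadable": "true"})
    return f"https://api.sketchfab.com/v3/search?{query}"


def place_objects(keywords: List[str], rng: random.Random) -> List[Placement]:
    """Pair every keyword with a search query and a random spot in the room."""
    w, h, d = ROOM_SIZE
    placements = []
    for kw in keywords:
        position = (
            rng.uniform(-w / 2, w / 2),  # x: left or right of the room centre
            rng.uniform(0.0, h),         # y: between floor and ceiling
            rng.uniform(-d, 0.0),        # z: behind the screen plane
        )
        placements.append(Placement(kw, search_url(kw), position))
    return placements


if __name__ == "__main__":
    for p in place_objects(["lighthouse", "old piano"], random.Random(7)):
        print(p.keyword, p.position, p.search_url)
```

Passing in a seeded `random.Random` makes a session reproducible during testing, while an unseeded one gives a different layout for every visitor.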

Initially, a physical structure with two side walls, a ceiling, and a front curtain was considered to accommodate the virtual room projection. This solution proved unfeasible due to high costs and spatial limitations.

For the first demo version, we chose a compact 2x2 m structure with a wooden frame and a screen made of greenhouse plastic, equipped with a rear projector, a microphone for voice capture, headphones, and a Kinect v2 for the CAVE system.
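The perspective correction in a single-screen CAVE setup is commonly done with an off-axis (asymmetric) projection frustum that follows the viewer's head. The sketch below shows that standard calculation and is not necessarily the installation's exact method. It assumes the projection surface itself measures 2x2 m, is centred at the origin of the coordinate system, and that the head position reported by the Kinect has already been converted to metres relative to the screen centre, with `ez` the distance in front of the screen. `Frustum` and `off_axis_frustum` are hypothetical names.

```python
from typing import NamedTuple, Tuple


class Frustum(NamedTuple):
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(
    eye: Tuple[float, float, float],
    screen_w: float = 2.0,  # assumed screen width in metres
    screen_h: float = 2.0,  # assumed screen height in metres
    near: float = 0.1,
    far: float = 20.0,
) -> Frustum:
    """Frustum extents at the near plane for an eye in front of the screen."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("the eye must be in front of the screen (ez > 0)")
    # Project the screen edges, as seen from the eye, onto the near plane.
    scale = near / ez
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
        far=far,
    )


if __name__ == "__main__":
    print(off_axis_frustum((0.0, 0.0, 2.0)))  # viewer centred: symmetric frustum
    print(off_axis_frustum((0.5, 0.0, 2.0)))  # viewer steps right: frustum skews
```

These six values are what a `glFrustum`-style projection expects. The virtual camera is then translated to the eye position, so the screen behaves like a window into the room.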

CAVE AUTOMATIC VIRTUAL ENVIRONMENT

The virtual reality cave: Behind the scenes at KeckCAVES.

Accessed on Feb 17th 2023, at: https://youtu.be/yF9gImZB1eI

Virtual Reality Cave - for the CIREVE & Université de Caen Normandie.

Accessed on Feb 19th 2023, at: https://youtu.be/iik95LoBGxI

KAVE: Building Kinect Based CAVE Automatic Virtual Environments.

Accessed on Feb 19th 2023, at: https://youtu.be/UU1MrGeKP0E

Kageyama, A., Ohno, N., Kawahara, S., Kashiyama, K. and Ohtani, H. (2013, January 25).

Immersive VR Visualizations by VFIVE. Part 2: Applications.

Accessed on Feb 23rd 2023, at: https://arxiv.org/abs/1301.6008

Cavallo, M. and Forbes, A.G. (2018, September 14).

CAVE-AR: A VR Authoring System to Interactively Design, Simulate, and Debug Multi-user AR Experiences.

VOICE CLONING

Jia, Y., Zhang, Y., Weiss, R.J., Wang, Q., Shen, J., Ren, F., Chen, Z., Nguyen, P., Pang, R., Moreno, I.L. and Wu, Y. (2019, January 2).

Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis.

Accessed on Feb 17th 2023, at: https://arxiv.org/abs/1806.04558

Kalchbrenner, N., Elsen, E., Simonyan, K., Noury, S., Casagrande, N., Lockhart, E., Stimberg, F., Oord, A.V.D., Dieleman, S. and Kavukcuoglu, K. (2018, February 23).

Efficient Neural Audio Synthesis.

Accessed on Feb 17th 2023, at: https://arxiv.org/abs/1802.08435

Real-Time Voice Cloning Toolbox.

Accessed on Feb 17th 2023, at: https://youtu.be/-O_hYhToKoA

3D CLONING

Aitpayev, K. and Gaber, J. (2020, April).

Creation of 3D Human Avatar using Kinect.

Accessed on Feb 28th 2023, at: https://www.researchgate.net/publication/340550098_Creation_of_3D_Human_Avatar_using_Kinect

Zhang, G., Li, J., Peng, J., Pang, H. and Jiao, X. (2014, October 14).

3D human body modeling based on single Kinect.

VARIED REFERENCES

Holograms Made With Smoke and Projectors.

Accessed on Feb 17th 2023, at: https://www.popularmechanics.com/technology/gadgets/a25658/spooky-holograms-with-smoke-and-projectors/

JOÃO LOURENÇO - SONUS TYPEWRITER.

Accessed on Feb 17th 2023, at: https://jocamalou.com/sonus-typewriter/

LIGHT BARRIER – kimchi and chips.

Accessed on Feb 17th 2023, at: https://www.kimchiandchips.com/works/lightbarrier/

3D Multi-Viewpoint Fog Projection Display.

Accessed on Feb 17th 2023, at: https://youtu.be/yzIeiyzRLCw

Blind Light: High art, low visibility - Antony Gormley’s.

Accessed on Feb 17th 2023, at: https://publicdelivery.org/get-lost-in-antony-gormleys-mist-room-blind-light/

@c - a project by Pedro Tudela & Miguel Carvalhais.

Accessed on Feb 17th 2023, at: https://at-c.org/installations/

@c Installations Sulco (Medida de Corte).

Accessed on Feb 17th 2023, at: https://www.at-c.org/installations/sulco.php

The Voxon VX1.

Accessed on Feb 17th 2023, at: https://voxon.co/voxon-vx1-available-for-purchase/